Hi there. Although I have wanted to write about electronics for a while, unfortunately it did not happen this time either. Today's topic is a configuration that took me two weeks: more precisely, creating an initrd. So why do I need such a thing?

Let me start with LVM. Although I had bad experiences with it at first, I have gotten so used to it since 2014 that a Linux installation without it is now unimaginable. I do not have partitions spanning multiple disks, but I think LVM's most useful feature is its flexibility in moving and resizing partitions.

The Linux From Scratch (LFS) project is the other side of this subject. It is a project that lets users compile the necessary tools and the Linux kernel from scratch, i.e. from their source code, in order to build a working Linux environment. It starts with a working compiler, of course. After gcc is compiled as a cross compiler, the basic tools are compiled with it and a minimal chroot (change root) environment is created. The Linux kernel is compiled in the last step and made bootable with GRUB. I got to know this project from Viktor Engelmann's videos. I will follow a different path from his here. We both actually deviate slightly from the official LFS documentation (or "the book" in LFS terminology), but both paths are based on the book in the end. A few of the steps I follow come from the (B)eyond LFS book.

There are actually two versions of the book, systemd and initd, but they are basically the same up to chapter 8: Packages. This post is based on the eleventh version of the book for both systemd and initd. Each version also comes in two formats, under "Download" and "Read Online" on the website. Although the .pdf file on the Download page looks more compact, copying and pasting commands is definitely easier from the .html version. Btw, most of the time in this project is spent compiling packages (it takes around 16 hours on my machine to compile everything, excluding tests). Therefore, I will only mention the steps where I deviated from the book.

Note that the hyperlinks in this post are linked to the latest version of the book as of the date of writing. When a more recent version than v11 is released, some links may lead to different pages.

System Requirements

The book says that a 10 GB partition is enough to compile all packages, but recommends a 30 GB partition to allow for growth. In my case, disk usage exceeded 20 GB while I was trying to compile the Fedora kernel, because too many kernel features are enabled in it.

I will install the OS on the first disk of a two-disk virtual machine (VM) and compile the packages on the second disk. The advantage is that I do not risk corrupting the disk of my own (host) system if I accidentally run a command on the host instead of in the chroot environment. Compiling code requires high disk performance, so working on an SSD is highly recommended. A VM gives IO performance close to that of its host. Another advantage of working in a VM is that it provides an isolated environment even if the physical disk doesn't have enough free space for a dedicated partition.

In his videos, Viktor Engelmann downloads and compiles the packages on a USB stick. I personally don't find this sensible, because even spinning disks can provide more IOPS than USB sticks, so working on a USB stick is not efficient for this project. If LFS is to be booted from USB, everything can be configured and compiled on a disk, then copied to a USB stick before the GRUB step, and the bootloader can be written to the USB stick at the end.

Apart from disk space, this project has no other requirements. A fast CPU will of course shorten the compile time, but you will get the same result on a slow CPU.

There is also no restriction on the OS installed on the first disk. Since I feel comfortable with Red Hat based systems and have wanted to try CentOS 8 Stream for a long time, I'll go for it.

VM Setup and Creating Partitions

On CentOS, sshd is enabled by default. I found the VM's IP address and connected via SSH, because it is easier to copy and paste commands into a terminal than to type them on the console. If the VM has a NAT configuration, you have to configure "port forwarding" to connect to it. I mentioned this in one of the previous posts.

My LVM template is a 512 MB /boot partition at the beginning of the disk and an LVM partition on the rest. Inside the LVM partition there are two 4 GB volumes for /var and /home, two 2 GB volumes for /tmp and swap, and the rest goes to the root partition. Total usage on the partitions other than root does not exceed 60 MB, so the 12.5 GB allocated to them remains practically untouched. As I mentioned above, root partition usage can reach 16 GB. Therefore, I added a relatively large disk of 40 GB. When the LFS compilation finished, the net size of the vmdk disk file was 28.8 GB.
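The arithmetic behind this template can be written down as a small sketch. The variable names are my own, and the calculation is idealized: it ignores partition alignment and LVM metadata, so the real root volume ends up slightly smaller than the figure computed here.

```sh
# Sizes in MiB, taken from the template described above.
# Variable names are illustrative, not part of any tool.
DISK_MIB=$(( 40 * 1024 ))      # 40 GB virtual disk
BOOT_MIB=512                   # /boot
VAR_MIB=$(( 4 * 1024 ))        # /var
HOME_MIB=$(( 4 * 1024 ))       # /home
TMP_MIB=$(( 2 * 1024 ))        # /tmp
SWAP_MIB=$(( 2 * 1024 ))       # swap

# Everything that is not the root volume.
NON_ROOT_MIB=$(( BOOT_MIB + VAR_MIB + HOME_MIB + TMP_MIB + SWAP_MIB ))

# Idealized remainder for the root volume (no alignment or metadata overhead).
ROOT_MIB=$(( DISK_MIB - NON_ROOT_MIB ))

echo "non-root: $NON_ROOT_MIB MiB"   # 12800 MiB = 12.5 GiB
echo "root:     $ROOT_MIB MiB"       # 28160 MiB = 27.5 GiB
```

So roughly 27.5 GB is left for the root volume, which leaves headroom above the usage figures mentioned in the text.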

I quickly partitioned the disk with the following command:

The result can be checked from the output:

/dev/sdb1 2048 1050623 1048576 512M 83 Linux

/dev/sdb2 1050624 83886079 82835456 39.5G 8e Linux LVM
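As a quick sanity check, the sector counts in this listing can be converted to sizes by hand. The helper below is only an illustration (the function name is mine) and assumes 512-byte logical sectors, which is consistent with the sizes fdisk printed.

```sh
# Convert a count of 512-byte sectors to MiB.
# 1 MiB = 2048 sectors of 512 bytes each.
sectors_to_mib() {
    echo $(( $1 / 2048 ))
}

sectors_to_mib 1048576     # /dev/sdb1 -> 512   (512M)
sectors_to_mib 82835456    # /dev/sdb2 -> 40447 (about 39.5G)
```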

Then I created partitions in LVM:

sudo vgcreate vg_lfs /dev/sdb2

sudo lvcreate -n lv_var -L 4G vg_lfs

sudo lvcreate -n lv_home -L 4G vg_lfs

sudo lvcreate -n lv_swap -L 2G vg_lfs

sudo lvcreate -n lv_tmp -L 2G vg_lfs

sudo lvcreate -n lv_root -l100%FREE vg_lfs

sudo lvscan

In the output of the last command, I should see both the new and the existing logical volumes. After they are created, the new ones need to be formatted:

sudo mkfs.ext4 /dev/vg_lfs/lv_root

sudo mkfs.ext4 /dev/vg_lfs/lv_tmp

sudo mkfs.ext4 /dev/vg_lfs/lv_home

sudo mkfs.ext4 /dev/vg_lfs/lv_var

sudo mkswap /dev/vg_lfs/lv_swap

The LFS book assumes that the ext4 file system is used during the installation (section 2.5).

Let's Create LFS Work Environment

When I saved and ran the script in section 2.2, only python3 and makeinfo were not found. I installed python3 with the sudo dnf install python3 command. I will come back to makeinfo later.

I created an LFS environment variable with the export LFS=/mnt/lfs command and added it to .bash_profile as well (section 2.6). I also created this directory. Now I need to mount the partitions (section 2.7), but to avoid mounting all four manually, I wrote a script:

if [[ x$LFS == "x" ]]; then

echo '$LFS' variable is empty.

exit 1

fi

STEP=1

for PARTITION in "/" "var" "home" "tmp"; do

if [[ $PARTITION == "/" ]]; then

LVMNAME="root";

else

LVMNAME=$PARTITION;

fi

echo "[ $STEP / 5 ] Mounting $LVMNAME partition"

if [ ! -d "$LFS/$PARTITION" ]; then

sudo mkdir -pv "$LFS/$PARTITION";

sudo chown $USER:$GROUPS "$LFS/$PARTITION";

fi

sudo mount "/dev/vg_lfs/lv_$LVMNAME" "$LFS/$PARTITION"

sudo chown $USER:$GROUPS "$LFS/$PARTITION";

STEP=$((STEP+1))

done

echo "[ 5 / 5 ] Activating swap.."

sudo swapon /dev/vg_lfs/lv_swap 2> /dev/null

If this script is only ever called by a single user, the $USER and $GROUPS variables can be replaced with the user name and group name. Swap doesn't necessarily need to be activated, but I did it nevertheless.
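The only non-obvious part of the script is the mapping from mount point to logical volume name. As an alternative sketch, that mapping can be isolated in a function and tried as a dry run that prints the mount commands instead of executing them. The function name and the LFS_DIR variable are my own; the volume group name vg_lfs is the one used above.

```sh
# Map a mount point (relative to $LFS) to the logical volume name used above.
lv_for_mountpoint() {
    case "$1" in
        /) echo "lv_root" ;;
        *) echo "lv_$1" ;;
    esac
}

# Dry run: print the mount commands instead of running them.
# Falls back to /mnt/lfs when LFS is not set in the environment.
LFS_DIR="${LFS:-/mnt/lfs}"
for mp in / var home tmp; do
    echo "mount /dev/vg_lfs/$(lv_for_mountpoint "$mp") $LFS_DIR/${mp#/}"
done
```

Because nothing is mounted, this version needs neither sudo nor the actual volumes, which makes it easy to check the names before running the real script.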

LFS Packages

By "package" I do not mean .rpm or .deb files; these are source code packages. Before downloading them, I need some additional software, i.e. wget, vim-enhanced and makeinfo, which I skipped earlier. makeinfo comes in the texinfo package, but its repository, "powertools", is disabled. So I installed it with the following command:

Then I created the "sources" directory (section 3.1), downloaded the files into it using the wget list and checked their hashes. If there are problems with the download, you can pass the --no-check-certificate parameter to wget.

I switched to root to run the commands in section 4.2, but first I exported the LFS variable again for root (because I had originally exported it only for my normal user). Then I ran the commands. I don't need to create an lfs user (4.3) since I am in a VM. I changed the ownership of the directories to my normal user. Then I saved the given .bashrc to my home directory (not root's) under the name "lfs_env.sh" and loaded it with the source command. If I reboot the VM, I have to run this again.

I followed the fifth and sixth chapters exactly as they are. I ran the commands in section 7.2, up to 7.3.2. The next commands (starting from section 7.3.3) have to run on each entry to the chroot environment, and the mounted resources must be unmounted in reverse order on exit, so I created a script from these commands:

if [[ x$LFS == "x" ]]; then

echo '$LFS' variable is empty.

exit 1

fi

mount -v --bind /dev $LFS/dev

mount -v --bind /dev/pts $LFS/dev/pts

mount -vt proc proc $LFS/proc

mount -vt sysfs sysfs $LFS/sys

mount -vt tmpfs tmpfs $LFS/run

if [ -h $LFS/dev/shm ]; then

mkdir -pv $LFS/$(readlink $LFS/dev/shm)

fi

chroot "$LFS" /usr/bin/env -i HOME=/root TERM="$TERM" PS1='(lfs chroot) \u:\w\$ ' PATH=/bin:/usr/bin:/sbin:/usr/sbin /bin/bash --login +h

umount -v $LFS/run

umount -v $LFS/sys

umount -v $LFS/proc

umount -v $LFS/dev/pts

umount -v $LFS/dev

If /dev/pts is not unmounted when exiting the chroot, a new terminal cannot be opened in the VM and the VM needs to be restarted.
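The "unmount in reverse order" rule can also be expressed generically instead of listing the umount commands by hand. The following is a minimal sketch with hypothetical helper names; it only prints what it would do, and it assumes that the paths contain no spaces.

```sh
# Space-separated list of mounted targets, most recent first.
MOUNTED=""

remember_mount() {
    # Prepend, so the most recently mounted target comes first.
    MOUNTED="$1${MOUNTED:+ $MOUNTED}"
}

unmount_all() {
    # Walks the list front to back, i.e. in reverse mount order.
    for target in $MOUNTED; do
        echo "umount -v $target"
    done
}

# Dry run with the same targets as the script above.
LFS_DIR="${LFS:-/mnt/lfs}"
for target in dev dev/pts proc sys run; do
    echo "would mount $LFS_DIR/$target"
    remember_mount "$LFS_DIR/$target"
done
unmount_all
```

In a real script, registering the cleanup function with trap on EXIT would run the unmounts even if the chroot command fails, which guards against the stuck /dev/pts situation described here.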

I entered the chroot and continued creating the necessary files and directories. Btw, the /etc/passwd and /etc/group files in section 7.6 are the first point where systemd and sysVinit differ.

Since the script unmounts the resources when exiting the chroot, the unmount commands in section 7.14 are no longer needed. I can also create a VM snapshot as a backup, so the rest of that section is not needed either.

In section 8.25, while compiling shadow with cracklib support, I got an "undefined reference to `FascistCheck'" error. I reconfigured with the following command and then the compilation succeeded:

The "make -k check" step in section 8.26 takes so long that I started the tests before going to bed and they were still incomplete when I woke up. From my understanding, there are more than 350K tests and some of them are stress tests. This section also states that some tests are known to fail. Test results are available here. My results were almost the same as these. There is a very simple sanity check at the end of the section. IMHO, just doing this check would be enough, but the book considers the "make check" step critical and not to be skipped.

In section 8.69, the systemd and sysVinit package lists start to differ significantly.

At the end of chapter 8, I removed the +h parameter that I had given to bash in the lfs_enter_chroot.sh script and saved the script.

The ninth chapter handles the initd/systemd settings, which means this chapter is completely different in the two versions. Here I followed the book exactly. To set the Turkish keyboard layout, I entered KEYMAP=trq in /etc/sysconfig/console for initd or in /etc/vconsole.conf for systemd. If no KEYMAP is set, the English layout is loaded by default. I skipped section 9.10.3 of the systemd book because I have a separate partition for /tmp.

Section 10.2 is very important because fstab is created there. I have to add all the partitions I created at the beginning to fstab. The /boot partition is currently /dev/sdb1 because it is still on the second disk. But when I detach the first disk from the VM to boot from LFS, this partition will become /dev/sda1. Hence I cannot use this device name. Under Linux, each file system has a unique UUID that does not depend on the device order. I have to use it so that /boot can always be mounted, regardless of whether it is on the first or the second disk. The value linked to /dev/sdb1 in the output of ls -la /dev/disk/by-uuid is the UUID I need. Alternatively, using the

command, I can list the disks and their UUIDs. The lsblk output is more verbose, but its UUID column is empty in the chroot environment. Therefore, I ran the command outside the chroot (in the VM), noted the value down and created fstab with it:

/dev/mapper/vg_lfs-lv_var /var ext4 defaults 0 0

/dev/mapper/vg_lfs-lv_home /home ext4 defaults 0 0

/dev/mapper/vg_lfs-lv_tmp /tmp ext4 defaults 0 0

/dev/mapper/vg_lfs-lv_swap swap swap pri=1 0 0

UUID=01234567-89ab-cdef-0123-456789abcdef /boot ext4 defaults 0 0

For the initd version, the proc, sysfs, devpts etc. entries must be added as well. I have not included them here; they are already in the book.
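The manual lookup in /dev/disk/by-uuid can be sketched as a small script. Both function names are hypothetical, and the optional second argument exists only so the lookup can be tried on a test directory; by default it reads /dev/disk/by-uuid, so it should be run outside the chroot, as described above.

```sh
# uuid_for_device DEVICE [BY_UUID_DIR]
# Prints the UUID whose symlink in BY_UUID_DIR points at DEVICE.
uuid_for_device() {
    dev_name=$(basename "$1")
    dir="${2:-/dev/disk/by-uuid}"
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        # The link target looks like ../../sdb1; compare its last component.
        if [ "$(basename "$(readlink "$link")")" = "$dev_name" ]; then
            basename "$link"
            return 0
        fi
    done
    return 1
}

# fstab_boot_line UUID
# Formats the /boot entry in the same style as the fstab above.
fstab_boot_line() {
    printf 'UUID=%s /boot ext4 defaults 0 0\n' "$1"
}

# Example (outside the chroot):
#   fstab_boot_line "$(uuid_for_device /dev/sdb1)"
```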

Compiling the Linux Kernel

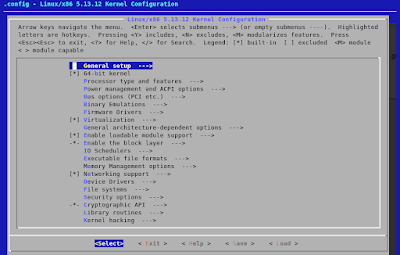

After all packages are compiled, it is time to compile the kernel. Section 10.3 of both versions covers this. I created a default configuration by running make mrproper and make defconfig. I explained these commands in detail in my previous article. I will use make menuconfig to select other features.

[Figure: make menuconfig TUI]

[Figure: systemd features]

The systemd errata mention that CONFIG_SECCOMP is not under the "Processor type and features" submenu, but that's OK, because defconfig already enables this feature. It can still be searched for in menuconfig or found in .config:

687:CONFIG_HAVE_ARCH_SECCOMP=y

688:CONFIG_HAVE_ARCH_SECCOMP_FILTER=y

689:CONFIG_SECCOMP=y

690:CONFIG_SECCOMP_FILTER=y

691:# CONFIG_SECCOMP_CACHE_DEBUG is not set
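Checking options one by one becomes tedious once the LVM-related features are added, so a small helper can verify a whole list against .config. This is only a sketch with a function name of my own; the options passed to it are whatever the book lists, and CONFIG_BLK_DEV_DM in the example is the device mapper option, used here purely as an illustration.

```sh
# check_kconfig CONFIG_FILE OPTION...
# Reports each option and returns non-zero if any of them is not
# set to y (built in) or m (module) in CONFIG_FILE.
check_kconfig() {
    config_file=$1
    shift
    missing=0
    for opt in "$@"; do
        if grep -Eq "^${opt}=(y|m)\$" "$config_file"; then
            echo "ok $opt"
        else
            echo "MISSING $opt"
            missing=1
        fi
    done
    return "$missing"
}

# Example, run from the kernel source directory:
#   check_kconfig .config CONFIG_SECCOMP CONFIG_BLK_DEV_DM
```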

Btw, this is just my personal opinion, but I prefer to keep such errors to myself because, based on my own experience on IRC and in their mailing list archive, some people in the LFS support channels are not friendly at all.

Back to the topic. My goal is installing LFS with LVM. LFS contains only the bare minimum; for example, it doesn't have a window manager. LVM is not considered basic either, and it is part of another project called (B)eyond LFS, or BLFS for short. The LVM section of the BLFS book has another list of kernel features that need to be enabled for LVM support. I will enable them and build the modular ones into the kernel (just a personal preference). In the meantime, I looked at the Gentoo documentation for LVM and enabled a few more features that are not mentioned in BLFS but are recommended by Gentoo. I prefer booting a kernel that is a few KB larger to recompiling it because of missing features.

Finally, readers working with VMware have to enable "Fusion MPT device support" as well, which was the main topic of the previous article. This is basically the driver for the SCSI controller in VMware; without it, the kernel cannot find the hard disk and cannot boot. This feature is under the "Device Drivers" submenu.

After completing all these steps, I went back to LFS section 10.3.1, compiled the kernel with make and then installed the modules. Since my /boot partition is on /dev/sdb1, I mounted it with the mount /dev/sdb1 /boot command and copied the vmlinuz (kernel), System.map and config files there.

GRUB Bootloader

After compiling the kernel and copying it to /boot, it's time to set up GRUB. At the moment, I have two options: I can install GRUB on /dev/sdb (this was actually my original plan) or I can add LFS to CentOS's existing GRUB on /dev/sda. The advantage of the first option is that the disk is configured to run independently of CentOS. The advantage of the second is that it is easy to set up.

First, I will configure the first option. I ran the grub-install /dev/sdb command. My grub.cfg file is slightly different from the one in the book:

set timeout=5

insmod ext2

set root=(hd0,1)

menuentry "GNU/Linux, Linux 5.13.12-lfs-11.0-systemd" {

linux /vmlinuz-5.13.12-lfs-11.0-systemd root=/dev/vg_lfs/lv_root ro

}

With the "set root" line, GRUB's root device is set to the first partition of the zeroth disk. From GRUB's point of view, the LFS disk is not the zeroth disk yet, but it will be once the CentOS disk is detached. There is no need to prefix paths with "/boot" because /boot is a separate partition. The root argument given to the kernel is the device path of the root partition. Btw, the configuration above is for systemd. For initd, the "menuentry" and "linux" lines will not have "-systemd"; that's all.
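GRUB numbers disks from zero and partitions from one, which is easy to mix up with the Linux device names. The following sketch translates one into the other. The function name is my own, it handles only single-letter sdX names, and it assumes that GRUB enumerates the disks in the same order in which the kernel names them, which holds in this two-disk VM but is not guaranteed on every machine.

```sh
# grub_device sdXN  ->  (hdI,N)
# Accepts names with or without the /dev/ prefix.
grub_device() {
    name=${1#/dev/}
    letter=$(printf '%s' "$name" | cut -c3)    # the X in sdXN
    part=$(printf '%s' "$name" | cut -c4-)     # the partition number N
    letters=abcdefghijklmnopqrstuvwxyz
    prefix=${letters%%"$letter"*}              # letters before X
    echo "(hd${#prefix},$part)"                # disk index = count of those letters
}

grub_device /dev/sda1    # (hd0,1)
grub_device /dev/sdb1    # (hd1,1)
```

This is why the same /boot partition is (hd1,1) while the CentOS disk is attached and (hd0,1) once it is removed.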

I exited the chroot environment after saving grub.cfg. Then I shut the VM down and removed its first disk. When I powered the VM up again, I saw the GRUB menu I had just created and got a kernel panic when I tried to boot this entry. Yaay! OK, it is not something to be happy about, but it indicates two things: (1) GRUB is set up correctly, (2) the kernel is properly compiled and copied to /boot.

So why did I get a kernel panic? As seen in the call trace, in the mount_block_root function the kernel could not find the device (specified with root= in GRUB) to mount as the root file system. Why? Because LVM has not been activated yet. Unfortunately, there is nothing to do at this point, so I added the virtual disk back and returned to CentOS.

Do I have to remove the disk to boot LFS and add it back to boot CentOS whenever a problem occurs? Hell, no! I appended the following lines to /etc/grub.d/40_custom in CentOS:

set root=(hd1,1)

linux /vmlinuz-5.13.12-lfs-11.0-systemd root=/dev/mapper/vg_lfs-lv_root ro

}

This is the second GRUB configuration option I mentioned above. It is essentially the same configuration, except that the "set root" line is inside the menuentry block. This snippet is again for systemd; for initd there is no "-systemd" part. Then I transferred the lines I added into CentOS' grub.cfg:

OS prober is a very nice feature for finding other installed OSes and adding them to GRUB automatically, but it doesn't work well due to a bug. Now it is easier to switch between OSes, so I can continue from where I left off.
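Since the two GRUB options differ only in the root device and the initd/systemd suffix, a menu entry of this shape can be generated rather than typed. The sketch below is a hypothetical helper, not part of GRUB; the title string is borrowed from the first grub.cfg above, and the optional fifth argument adds an initrd line for setups that need one.

```sh
# print_menuentry TITLE GRUB_ROOT KERNEL ROOT_DEVICE [INITRD]
# Prints a menuentry block with "set root" inside it.
print_menuentry() {
    printf 'menuentry "%s" {\n' "$1"
    printf '    set root=%s\n' "$2"
    printf '    linux /%s root=%s ro\n' "$3" "$4"
    if [ -n "${5:-}" ]; then
        printf '    initrd /%s\n' "$5"
    fi
    printf '}\n'
}

print_menuentry "GNU/Linux, Linux 5.13.12-lfs-11.0-systemd" "(hd1,1)" \
    "vmlinuz-5.13.12-lfs-11.0-systemd" "/dev/mapper/vg_lfs-lv_root"
```

The output could be appended to /etc/grub.d/40_custom after review; the paths have no /boot prefix because /boot is a separate partition.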

initramfs

I opened the LVM section (or the systemd LVM section) of the BLFS book. The second-to-last paragraph of "About LVM" says that an initramfs is required to use LVM on the root file system. An initramfs (short for "Initial RAM Filesystem") is a compressed archive containing some basic programs and configuration files. If this file exists, the bootloader loads it into memory, the kernel unpacks it as a temporary root file system, and the programs and configs in it perform the operations needed for the boot to continue. The "rescue kernel" that comes with many distros is actually a simple initramfs containing a shell.

BLFS has its own initramfs creation script. To add LVM support to the initramfs, LVM must be installed first. For this, the following must be installed in order:

1) libaio, which is a prerequisite of LVM

2) the which utility: not actually needed for LVM, but very useful for troubleshooting

3) mdadm. Its tests can be skipped; the command doesn't even run the tests.*

4) cpio, to pack the initramfs archive

5) LVM. Its tests also take a long time and some of them are even problematic. I configured LVM with the --with-thin* and --with-cache* parameters given in the book, as well as the --with-vdo=none parameter. There is an extra command in the systemd LVM section, though it's not critical.

6) the initramfs script

* I haven't tested it, but LVM should also work without mdadm.

The mkinitramfs script consists of two parts. The first part is the script itself and the second part is a file named init.in, which the script copies into the initramfs. In LFS v10.1, this script had a bug: it searched for the coreutils and util-linux components that are essential for the initramfs (like ls, cp, mount, umount etc.) in /bin instead of /usr/bin and in /lib instead of /usr/lib, so it ended with an error. As a workaround, I had linked the missing files from /usr/bin and /usr/lib to the locations where the script expected them. This bug is fixed in v11.

Since I gave the kernel version as a parameter to the script, it added the kernel modules (.ko files) to the initramfs and created it.

Creating initrd.img-5.13.12... done.
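Before copying the image anywhere, its container format can be checked from the first few bytes of the file. This is a rough sketch with a function name of my own; it recognizes only the gzip magic number and the ASCII header of an uncompressed "newc" cpio archive, and I am assuming, without having verified it in the script, that the image is one of these two.

```sh
# image_format FILE
# Guesses the container format from the leading magic bytes.
image_format() {
    magic=$(od -An -tx1 -N6 "$1" | tr -d ' \n')
    case "$magic" in
        1f8b*)         echo "gzip" ;;        # gzip magic number 1f 8b
        303730373031*) echo "cpio-newc" ;;   # ASCII "070701"
        *)             echo "unknown" ;;
    esac
}

# Example:
#   image_format initrd.img-5.13.12
```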

I copied the file to /boot (the partition must be mounted first) and changed /boot/grub/grub.cfg as follows before exiting the chroot:

linux /vmlinuz-5.13.12-lfs-11.0-systemd root=/dev/vg_lfs/lv_root ro

initrd /initrd.img-5.13.12

}

The configuration above will only work when the CentOS disk is removed. I am actually using CentOS' GRUB. So, after exiting the chroot, I added the same initrd line to /etc/grub.d/40_custom and reran this command:

I restarted the VM, chose LFS and voilà:

and the same result for the systemd version:

Although some services are still failing on the systemd machine, both systems boot without any problem in general.